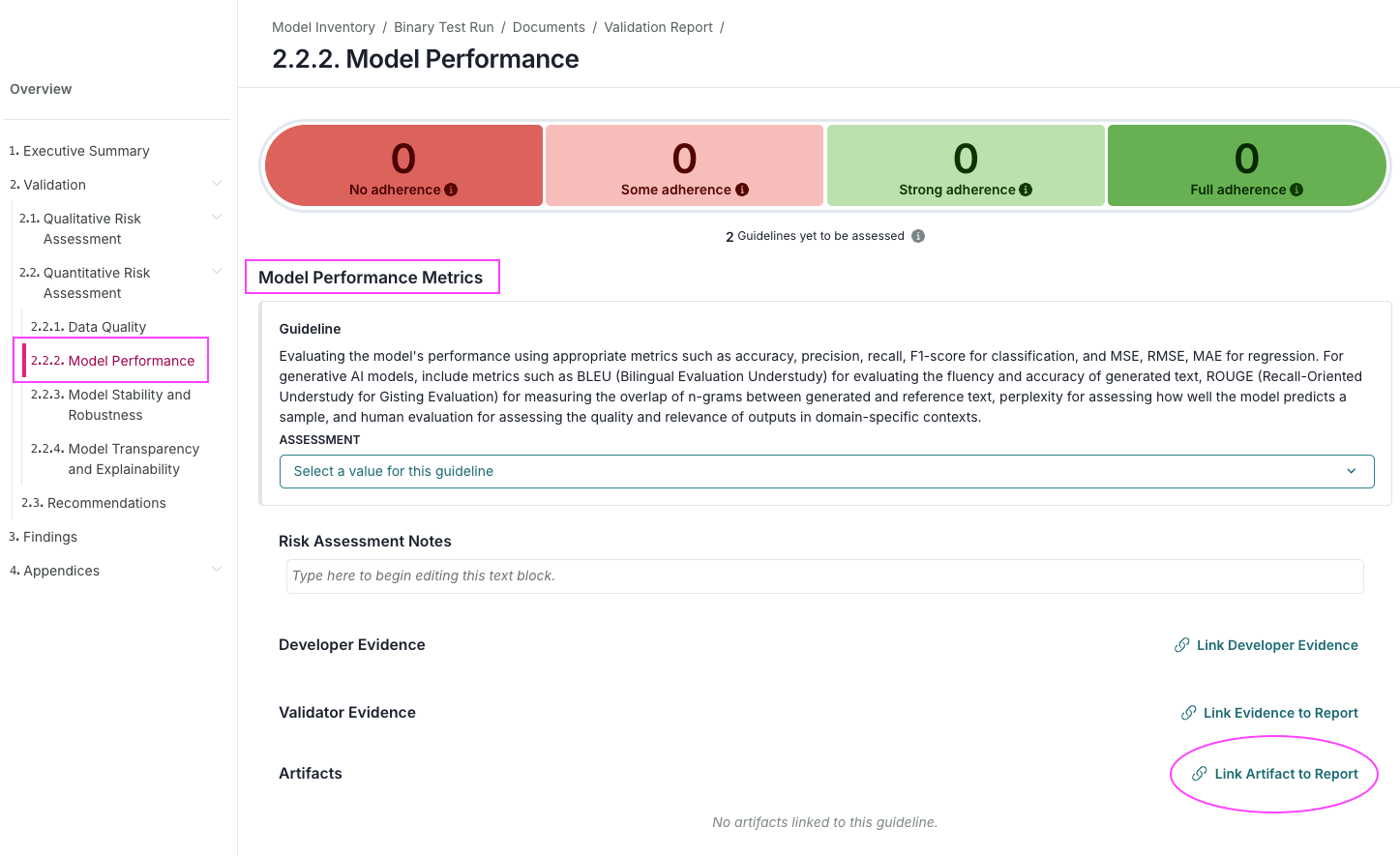

| ID | Name | Description | Has Figure | Has Table | Required Inputs | Params | Tags | Tasks |
|---|---|---|---|---|---|---|---|---|
| validmind.model_validation.sklearn.CalibrationCurve | Calibration Curve | Evaluates the calibration of probability estimates by comparing predicted probabilities against observed... | True | False | ['model', 'dataset'] | {'n_bins': {'type': 'int', 'default': 10}} | ['sklearn', 'model_performance', 'classification'] | ['classification'] |
| validmind.model_validation.sklearn.ClassifierPerformance | Classifier Performance | Evaluates performance of binary or multiclass classification models using precision, recall, F1-Score, accuracy,... | False | True | ['dataset', 'model'] | {'average': {'type': 'str', 'default': 'macro'}} | ['sklearn', 'binary_classification', 'multiclass_classification', 'model_performance'] | ['classification', 'text_classification'] |
| validmind.model_validation.sklearn.ConfusionMatrix | Confusion Matrix | Evaluates and visually represents the classification ML model's predictive performance using a Confusion Matrix... | True | False | ['dataset', 'model'] | {'threshold': {'type': 'float', 'default': 0.5}} | ['sklearn', 'binary_classification', 'multiclass_classification', 'model_performance', 'visualization'] | ['classification', 'text_classification'] |
| validmind.model_validation.sklearn.HyperParametersTuning | Hyper Parameters Tuning | Performs exhaustive grid search over specified parameter ranges to find optimal model configurations... | False | True | ['model', 'dataset'] | {'param_grid': {'type': 'dict', 'default': None}, 'scoring': {'type': 'Union', 'default': None}, 'thresholds': {'type': 'Union', 'default': None}, 'fit_params': {'type': 'dict', 'default': None}} | ['sklearn', 'model_performance'] | ['clustering', 'classification'] |
| validmind.model_validation.sklearn.MinimumAccuracy | Minimum Accuracy | Checks if the model's prediction accuracy meets or surpasses a specified threshold.... | False | True | ['dataset', 'model'] | {'min_threshold': {'type': 'float', 'default': 0.7}} | ['sklearn', 'binary_classification', 'multiclass_classification', 'model_performance'] | ['classification', 'text_classification'] |
| validmind.model_validation.sklearn.MinimumF1Score | Minimum F1 Score | Assesses if the model's F1 score on the validation set meets a predefined minimum threshold, ensuring balanced... | False | True | ['dataset', 'model'] | {'min_threshold': {'type': 'float', 'default': 0.5}} | ['sklearn', 'binary_classification', 'multiclass_classification', 'model_performance'] | ['classification', 'text_classification'] |
| validmind.model_validation.sklearn.MinimumROCAUCScore | Minimum ROCAUC Score | Validates model by checking if the ROC AUC score meets or surpasses a specified threshold.... | False | True | ['dataset', 'model'] | {'min_threshold': {'type': 'float', 'default': 0.5}} | ['sklearn', 'binary_classification', 'multiclass_classification', 'model_performance'] | ['classification', 'text_classification'] |
| validmind.model_validation.sklearn.ModelsPerformanceComparison | Models Performance Comparison | Evaluates and compares the performance of multiple Machine Learning models using various metrics like accuracy,... | False | True | ['dataset', 'models'] | {} | ['sklearn', 'binary_classification', 'multiclass_classification', 'model_performance', 'model_comparison'] | ['classification', 'text_classification'] |
| validmind.model_validation.sklearn.PopulationStabilityIndex | Population Stability Index | Assesses the Population Stability Index (PSI) to quantify the stability of an ML model's predictions across... | True | True | ['datasets', 'model'] | {'num_bins': {'type': 'int', 'default': 10}, 'mode': {'type': 'str', 'default': 'fixed'}} | ['sklearn', 'binary_classification', 'multiclass_classification', 'model_performance'] | ['classification', 'text_classification'] |
| validmind.model_validation.sklearn.PrecisionRecallCurve | Precision Recall Curve | Evaluates the precision-recall trade-off for binary classification models and visualizes the Precision-Recall curve.... | True | False | ['model', 'dataset'] | {} | ['sklearn', 'binary_classification', 'model_performance', 'visualization'] | ['classification', 'text_classification'] |
| validmind.model_validation.sklearn.ROCCurve | ROC Curve | Evaluates binary classification model performance by generating and plotting the Receiver Operating Characteristic... | True | False | ['model', 'dataset'] | {} | ['sklearn', 'binary_classification', 'multiclass_classification', 'model_performance', 'visualization'] | ['classification', 'text_classification'] |
| validmind.model_validation.sklearn.RegressionErrors | Regression Errors | Assesses the performance and error distribution of a regression model using various error metrics.... | False | True | ['model', 'dataset'] | {} | ['sklearn', 'model_performance'] | ['regression', 'classification'] |
| validmind.model_validation.sklearn.TrainingTestDegradation | Training Test Degradation | Tests if model performance degradation between training and test datasets exceeds a predefined threshold.... | False | True | ['datasets', 'model'] | {'max_threshold': {'type': 'float', 'default': 0.1}} | ['sklearn', 'binary_classification', 'multiclass_classification', 'model_performance', 'visualization'] | ['classification', 'text_classification'] |
| validmind.model_validation.statsmodels.GINITable | GINI Table | Evaluates classification model performance using AUC, GINI, and KS metrics for training and test datasets.... | False | True | ['dataset', 'model'] | {} | ['model_performance'] | ['classification'] |
| validmind.ongoing_monitoring.CalibrationCurveDrift | Calibration Curve Drift | Evaluates changes in probability calibration between reference and monitoring datasets.... | True | True | ['datasets', 'model'] | {'n_bins': {'type': 'int', 'default': 10}, 'drift_pct_threshold': {'type': 'float', 'default': 20}} | ['sklearn', 'binary_classification', 'model_performance', 'visualization'] | ['classification', 'text_classification'] |
| validmind.ongoing_monitoring.ClassDiscriminationDrift | Class Discrimination Drift | Compares classification discrimination metrics between reference and monitoring datasets.... | False | True | ['datasets', 'model'] | {'drift_pct_threshold': {'type': '_empty', 'default': 20}} | ['sklearn', 'binary_classification', 'multiclass_classification', 'model_performance'] | ['classification', 'text_classification'] |
| validmind.ongoing_monitoring.ClassificationAccuracyDrift | Classification Accuracy Drift | Compares classification accuracy metrics between reference and monitoring datasets.... | False | True | ['datasets', 'model'] | {'drift_pct_threshold': {'type': '_empty', 'default': 20}} | ['sklearn', 'binary_classification', 'multiclass_classification', 'model_performance'] | ['classification', 'text_classification'] |
| validmind.ongoing_monitoring.ConfusionMatrixDrift | Confusion Matrix Drift | Compares confusion matrix metrics between reference and monitoring datasets.... | False | True | ['datasets', 'model'] | {'drift_pct_threshold': {'type': '_empty', 'default': 20}} | ['sklearn', 'binary_classification', 'multiclass_classification', 'model_performance'] | ['classification', 'text_classification'] |
| validmind.ongoing_monitoring.ROCCurveDrift | ROC Curve Drift | Compares ROC curves between reference and monitoring datasets.... | True | False | ['datasets', 'model'] | {} | ['sklearn', 'binary_classification', 'model_performance', 'visualization'] | ['classification', 'text_classification'] |
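The `CalibrationCurve` test in the table takes an `n_bins` parameter (default 10). It compares predicted probabilities against what is observed in the data. The sketch below illustrates the underlying idea in pure Python. It is not ValidMind's implementation, and the function name `calibration_curve` here is hypothetical. It assumes binary 0/1 labels and probabilities in [0, 1], and it uses equal-width bins. Like scikit-learn's `calibration_curve` with `strategy="uniform"`, it drops empty bins, though exact bin-edge handling may differ.

```python
def calibration_curve(y_true, y_prob, n_bins=10):
    """Return (mean_predicted, fraction_positive) for each non-empty bin.

    Sketch only: assumes binary labels (0/1) and probabilities in [0, 1].
    """
    if len(y_true) != len(y_prob):
        raise ValueError("y_true and y_prob must have the same length")
    counts = [0] * n_bins
    prob_sums = [0.0] * n_bins
    pos_sums = [0] * n_bins
    for label, p in zip(y_true, y_prob):
        # Equal-width bins over [0, 1]; p == 1.0 is placed in the last bin.
        idx = min(int(p * n_bins), n_bins - 1)
        counts[idx] += 1
        prob_sums[idx] += p
        pos_sums[idx] += label
    mean_pred, frac_pos = [], []
    for c, ps, pos in zip(counts, prob_sums, pos_sums):
        if c:  # skip empty bins instead of reporting 0/0
            mean_pred.append(ps / c)
            frac_pos.append(pos / c)
    return mean_pred, frac_pos


mean_pred, frac_pos = calibration_curve([0, 0, 1, 1], [0.1, 0.15, 0.8, 0.9], n_bins=2)
print(mean_pred, frac_pos)
```

A well-calibrated model produces pairs where `mean_pred` and `frac_pos` are close in every bin. Plotting one against the other gives the familiar reliability diagram.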
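The `PopulationStabilityIndex` row lists `num_bins` (default 10) and `mode` (default `'fixed'`). The standard PSI formula bins both samples and sums `(a_i - e_i) * ln(a_i / e_i)` over the bins. Here `e_i` is the share of reference (expected) scores in bin `i`, and `a_i` is the share of monitoring (actual) scores. The following minimal sketch implements that textbook formula. It is not ValidMind's code. It assumes scores in [0, 1] and equal-width bins, and it does not model the `mode` parameter, whose behavior is not described here. The `eps` floor for empty bins is also an assumption of this sketch.

```python
import math


def population_stability_index(reference, monitoring, num_bins=10, eps=1e-6):
    """PSI between two samples of scores in [0, 1] using equal-width bins."""
    if not reference or not monitoring:
        raise ValueError("both samples must be non-empty")

    def proportions(scores):
        counts = [0] * num_bins
        for s in scores:
            counts[min(int(s * num_bins), num_bins - 1)] += 1
        total = len(scores)
        # Floor each share at eps so empty bins never cause log(0) or x / 0.
        return [max(c / total, eps) for c in counts]

    expected = proportions(reference)
    actual = proportions(monitoring)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


ref = [0.1] * 5 + [0.9] * 5
mon = [0.1] * 8 + [0.9] * 2
print(population_stability_index(ref, mon, num_bins=2))
```

Identical distributions give a PSI of 0, and the value grows as the monitoring distribution moves away from the reference. A commonly cited rule of thumb (not a ValidMind default) reads values below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift.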
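The `GINITable` test reports AUC, GINI, and KS. Their textbook definitions are as follows:

- AUC is the probability that a randomly chosen positive scores higher than a randomly chosen negative, with ties counted as half.
- GINI = 2 * AUC - 1.
- KS is the largest gap between the score distributions of positives and negatives, which equals the maximum of |TPR - FPR| over thresholds.

The pure-Python sketch below computes all three for binary labels. It uses an O(n²) pairwise AUC for readability rather than speed. It illustrates the definitions and is not ValidMind's implementation; `auc_gini_ks` is a hypothetical name.

```python
def auc_gini_ks(y_true, y_score):
    """Return (AUC, GINI, KS) for binary labels 0/1 and real-valued scores."""
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")

    # AUC via pairwise comparison (Mann-Whitney form); ties count as 0.5.
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    auc = wins / (len(pos) * len(neg))
    gini = 2 * auc - 1

    # KS: largest |TPR - FPR| when predicting positive for score >= t.
    ks = 0.0
    for t in sorted(set(y_score)):
        tpr = sum(1 for p in pos if p >= t) / len(pos)
        fpr = sum(1 for n in neg if n >= t) / len(neg)
        ks = max(ks, abs(tpr - fpr))
    return auc, gini, ks


print(auc_gini_ks([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

For larger datasets, scikit-learn's `roc_auc_score` and `roc_curve` compute the same AUC and the TPR/FPR pairs efficiently.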
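Several rows are pass/fail checks against a parameter. The `Minimum*` tests take a `min_threshold`, and their descriptions say the score must "meet or surpass" it, so the comparison is inclusive. The `ongoing_monitoring` drift tests take a `drift_pct_threshold` (default 20). The listing does not say how drift is measured. The sketch below therefore assumes, hypothetically, that drift is the absolute relative change from the reference value, expressed in percent. The helper names `meets_min_threshold`, `drift_pct`, and `passes_drift_check` are illustrative only and are not ValidMind APIs.

```python
def meets_min_threshold(score, min_threshold):
    """Inclusive check matching the 'meets or surpasses' wording."""
    return score >= min_threshold


def drift_pct(reference_value, monitoring_value):
    """Hypothetical drift measure: absolute relative change, in percent."""
    if reference_value == 0:
        raise ValueError("reference_value must be non-zero for a relative change")
    return abs(monitoring_value - reference_value) / abs(reference_value) * 100


def passes_drift_check(reference_value, monitoring_value, drift_pct_threshold=20):
    """Assumed rule: pass while the relative change stays at or below the threshold."""
    return drift_pct(reference_value, monitoring_value) <= drift_pct_threshold


# Accuracy of 0.80 on the reference data and 0.70 on monitoring data.
print(meets_min_threshold(0.70, 0.7), drift_pct(0.80, 0.70), passes_drift_check(0.80, 0.70))
```

With these assumptions, a drop in accuracy from 0.80 to 0.70 is a 12.5% relative change and passes the default threshold of 20, whereas a drop from 0.50 to 0.30 (40%) would fail.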