Interfaces to support the code explainer feature in ValidMind

validmind-library

2.8.26

documentation

enhancement

highlight

This update introduces an experimental feature for text generation tasks in the ValidMind Library. It adds interfaces for using the `code_explainer` LLM feature. The feature lives in the `experimental` namespace for now so that we can gather feedback.

How to use:

Read the source code as a string:

```python
with open("customer_churn.py", "r") as f:
    source_code = f.read()
```

Define the input for the `run_task` function. The input is a dictionary with two keys, `source_code` and `additional_instructions`:

```python
code_explainer_input = {
    "source_code": source_code,
    "additional_instructions": """
    Please explain the code in a way that is easy to understand.
    """,
}
```

Run the `code_explainer` task by passing `task="code_explainer"`:

```python
import validmind as vm

result = vm.experimental.agents.run_task(
    task="code_explainer",
    input=code_explainer_input,
)
```
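If you explain several files, you can fold the file-reading and input-building steps into one small helper. The sketch below is an illustration only and is not part of the ValidMind API. `build_code_explainer_input` is a hypothetical name, and the sketch assumes the task needs only the `source_code` and `additional_instructions` keys described above.

```python
from pathlib import Path


# Hypothetical helper, not part of the ValidMind Library: reads a source
# file and returns the input dictionary for the code_explainer task.
def build_code_explainer_input(path, additional_instructions=""):
    """Return a dict with the source_code and additional_instructions keys."""
    source_code = Path(path).read_text(encoding="utf-8")
    if not source_code.strip():
        # An empty (or whitespace-only) file leaves nothing to explain,
        # so fail early with a clear message.
        raise ValueError(f"{path} is empty")
    return {
        "source_code": source_code,
        "additional_instructions": additional_instructions,
    }
```

You can pass the returned dictionary as `input=` to `vm.experimental.agents.run_task(task="code_explainer", ...)` instead of building `code_explainer_input` by hand. Raising on an empty file is a design choice of this sketch. It makes a wrong path or an empty script fail before the task runs.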

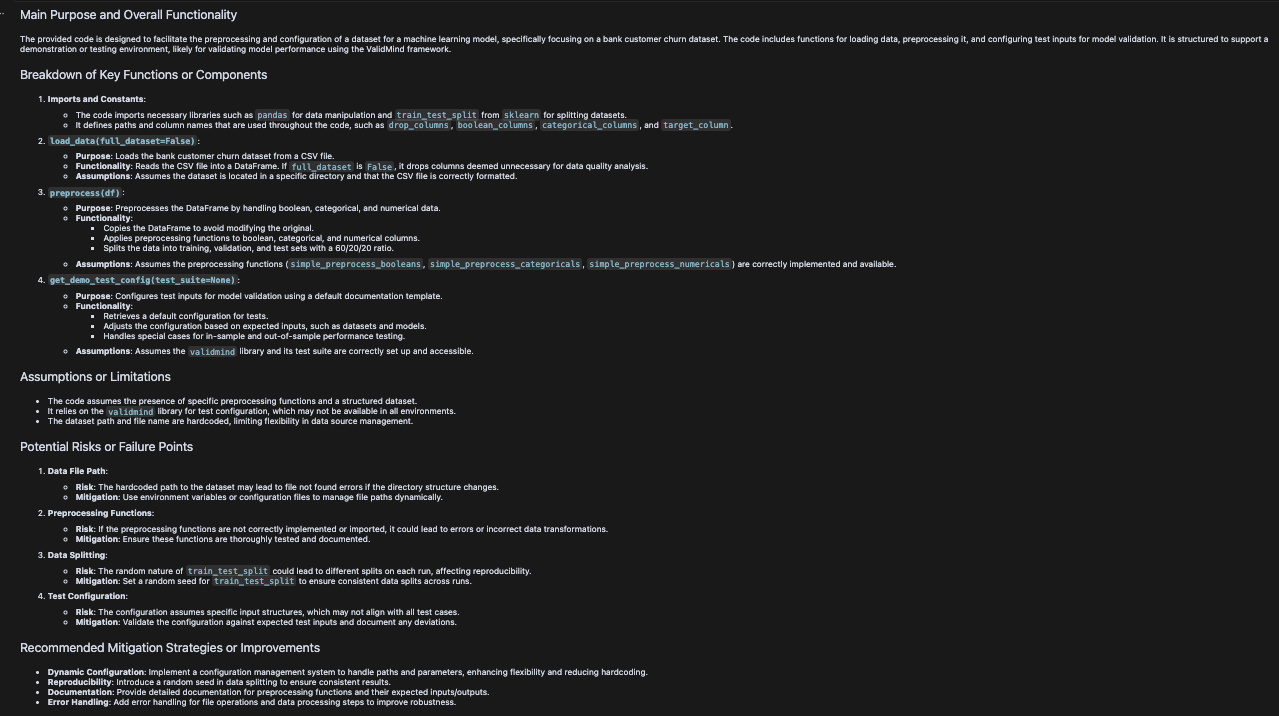

Example Output: